What are AI accelerators?


AI Accelerators Introduction

An AI accelerator is specialized hardware designed to speed up machine learning and artificial intelligence applications, including neural networks and machine vision.

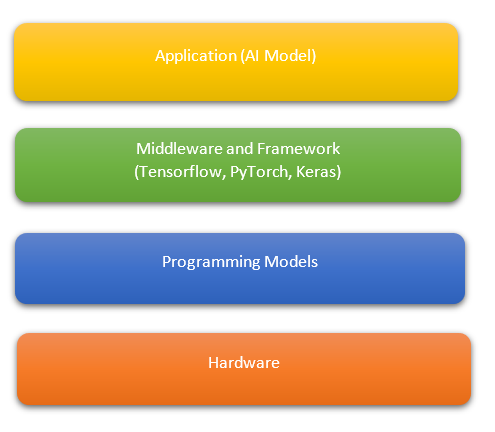

The datacenter chips that run machine learning and deep learning workloads consist of a few CPU cores and AI accelerators connected to these host CPUs. Together, as a system, they are meant to work very efficiently, either for training a model or for high-capacity inference.

CPU, GPU - Comparative study

Before looking at why AI accelerators are needed, it helps to briefly review CPU and GPU architecture. Both rely on specialized vector instructions that operate on multiple data elements simultaneously: SIMD (single instruction, multiple data) on CPUs and SIMT (single instruction, multiple threads) on GPUs. A CPU core can execute instructions independently of the other cores. A server CPU typically has fewer but faster cores than a GPU; parallelized code benefits from the multiple CPU cores, while SIMD instructions benefit from the higher single-core frequency. A CPU provides maximum flexibility and is typically simpler to program than other hardware, but this flexibility comes at the expense of higher power consumption. Embarrassingly parallel workloads with predictable memory access patterns do not require many of the CPU's capabilities, so a more dedicated processor should provide higher performance per watt.
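To make the SIMD idea concrete, here is a minimal sketch in plain Python. It is only a conceptual model, not real vector hardware: the function names and the `lanes` parameter are hypothetical, and an "instruction" is simply counted once per loop iteration. It shows how a vector unit that covers several elements per instruction needs fewer instructions for the same result.

```python
def scalar_add(a, b):
    """Add element by element: one counted 'instruction' per element."""
    out = []
    instructions = 0
    for x, y in zip(a, b):
        out.append(x + y)
        instructions += 1
    return out, instructions


def simd_add(a, b, lanes=4):
    """Add `lanes` elements per counted 'instruction' (hypothetical vector width)."""
    out = []
    instructions = 0
    for i in range(0, len(a), lanes):
        # One vector instruction is modeled as covering the whole chunk at once.
        out.extend(x + y for x, y in zip(a[i:i + lanes], b[i:i + lanes]))
        instructions += 1
    return out, instructions


a = list(range(16))
b = [10] * 16
scalar_result, scalar_count = scalar_add(a, b)
simd_result, simd_count = simd_add(a, b, lanes=4)
print(scalar_count, simd_count)  # 16 4
```

Both versions produce the same sums; only the number of issued instructions differs, which is the benefit the vector instructions are meant to provide.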

GPUs are designed for large parallel tasks: a GPU core has to execute the same instruction as the other cores within its group. For highly parallel training workloads, GPUs can typically offer higher performance at lower energy. This higher energy efficiency is the main reason GPUs are commonly used for training, while CPUs are often used for inference, which has less parallelism and can benefit from the higher CPU frequency.
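The lockstep constraint can be sketched with a small simulation. This is a simplified model under stated assumptions, not how any particular GPU is implemented: the function name `simt_group_execute` and the example branch are hypothetical, and masking is modeled with a plain Python list. When all threads in a group take the same branch, one step is enough; when they diverge, the group issues both paths in turn while the inactive threads idle.

```python
def simt_group_execute(values):
    """Compute `x * 2 if x is even else x + 1` for one group running in lockstep.

    Returns the per-thread results and the number of steps the group issued.
    """
    even_mask = [v % 2 == 0 for v in values]
    results = list(values)
    steps = 0

    # The whole group issues the "even" path; threads with the mask off idle.
    if any(even_mask):
        results = [v * 2 if m else r for v, m, r in zip(values, even_mask, results)]
        steps += 1

    # The whole group issues the "odd" path; threads with the mask on idle.
    if not all(even_mask):
        results = [r if m else v + 1 for v, m, r in zip(values, even_mask, results)]
        steps += 1

    return results, steps


uniform_results, uniform_steps = simt_group_execute([2, 4, 6, 8])
divergent_results, divergent_steps = simt_group_execute([1, 2, 3, 4])
print(uniform_steps, divergent_steps)  # 1 2
```

In this model, uniform work keeps every thread busy on each step, which is one way to see why highly parallel, regular workloads suit this style of execution.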

Why AI Accelerators?

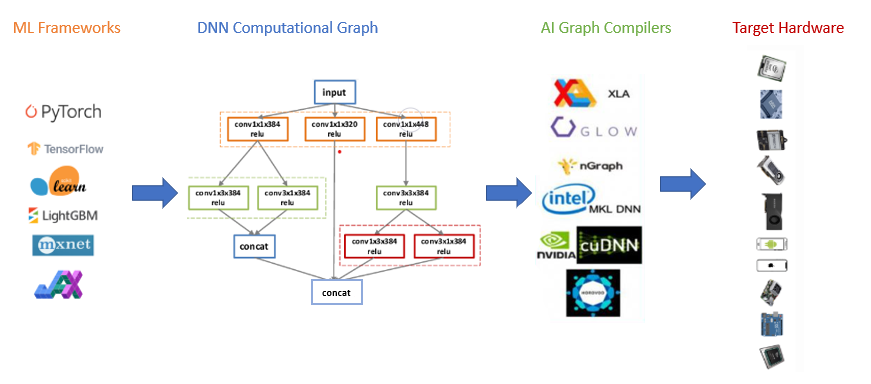

Now that we have established the core concepts of CPUs and GPUs, let's talk about the need for AI accelerators. Dedicated AI processors have a high ratio of dedicated compute to general-purpose compute. They are ideal for workloads with predictable memory access patterns, as they can provide the highest performance per watt when the data is properly pipelined to the compute units. GPUs were originally architected for graphics, but because of their parallelism they are considerably more efficient for these workloads than CPUs alone.

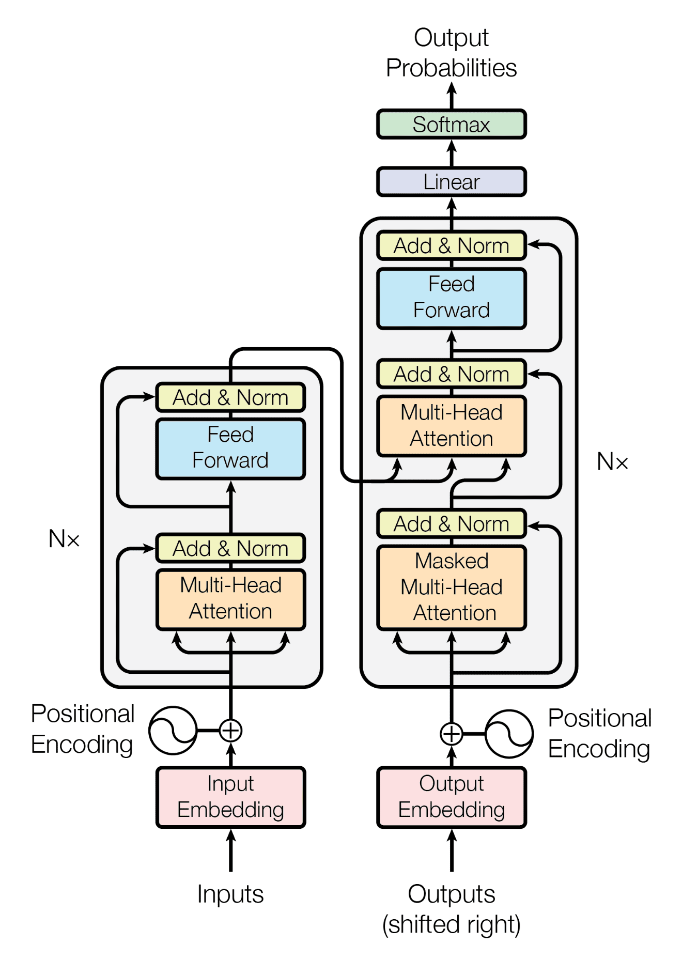

Since a GPU is already an efficient machine for model training, what do AI accelerators do better? In neural networks, the operation that must be done very efficiently is either matrix-matrix multiplication or matrix-vector multiplication. Hence, a highly efficient matmul engine, combined with a cluster of tensor processor cores that have a custom instruction set and AI-centric operations, boosts overall performance. The combination of fully programmable tensor cores and a very efficient matmul engine makes it possible to reach very high efficiency in the use of compute and memory resources.
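As a rough illustration of why these two operations dominate, the sketch below writes out a matrix-vector product (one input through a dense layer) and a matrix-matrix product (a batch of inputs through the same layer) in plain Python, and counts the multiply-accumulate (MAC) operations involved. The helper names and the tiny example weights are hypothetical; a real matmul engine performs the same arithmetic in dedicated hardware.

```python
def matvec(weights, x):
    """Matrix-vector product: one input vector through a dense layer."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


def matmul(a, b):
    """Matrix-matrix product: each column of `b` is one input in a batch."""
    cols_b = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols_b] for row in a]


def mac_count(m, k, n):
    """Multiply-accumulates needed for an (m x k) by (k x n) product."""
    return m * k * n


weights = [[1, 2, 3],
           [4, 5, 6]]            # 2 outputs, 3 inputs
single_input = [1, 0, -1]
batch = [[1, 2],
         [0, 1],
         [-1, 0]]                # 3 inputs, batch of 2 (one input per column)

single_output = matvec(weights, single_input)
batch_output = matmul(weights, batch)
print(single_output)             # [-2, -2]
print(batch_output)              # [[-2, 4], [-2, 13]]
print(mac_count(2, 3, 2))        # 12
```

The MAC count grows with the product of all three dimensions, so for realistically sized layers and batches this arithmetic quickly becomes the bulk of the work.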

Which hardware is best for neural networks?

While there are many different types of processors, they are becoming more heterogeneous and are beginning to share more common characteristics. Given the growing complexity of neural networks, the right processor is one that balances general-purpose and specialized compute and scales well across nodes. In general, if you are working with a variety of complex workloads, or need a starting point when exploring an AI solution, a more general-purpose CPU, especially one with built-in acceleration, is likely the best choice.

If you require the highest performance per watt with predictable memory access patterns, or you need something more specialized, then a dedicated accelerator is likely the best choice. A vital consideration in either case is that the processor is simple to program and use.