What are Transformer models?

Transformer Model Introduction

A transformer model is a deep neural network that learns context, and thus meaning, by tracking relationships in sequential data, like the words in this sentence. It adopts the mechanism of self-attention, differentially weighting the significance of each part of the input data. Like recurrent neural networks (RNNs) and their LSTM variants, transformers are designed to process sequential input data, such as natural language, with applications to tasks such as translation and text summarization. However, unlike RNNs, which are slow to train because the input must be passed through one step at a time, transformers process the entire input at once, in parallel.

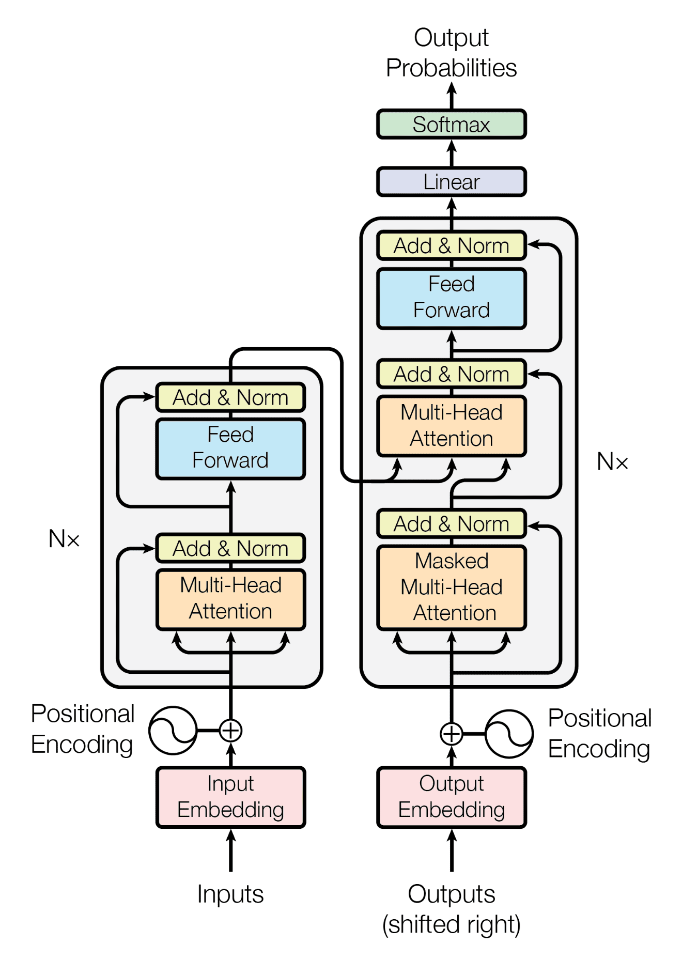

Transformers apply an evolving set of mathematical techniques, called attention or self-attention, to detect the subtle ways in which even distant data elements in a series depend on each other. They were introduced in 2017 in the paper “Attention Is All You Need” by a team at Google Brain and are increasingly the model of choice for NLP problems, replacing RNN models such as long short-term memory (LSTM). The transformer employs an encoder-decoder architecture, much like RNN-based sequence models, but it passes the input sequence through in parallel, which allows training to be parallelized and scaled to larger datasets. The task of the encoder is to map an input sequence to a sequence of continuous representations, which is then fed into the decoder. The decoder receives the output of the encoder together with its own output from the previous time step and generates an output sequence.

Deep Dive into Transformer model blocks

Positional Encoding

The first thing we do is generate input embeddings of the text, mapping every word to a point in a vector space where similar words are closer to each other. We could use pretrained word embeddings, e.g. GloVe or ELMo, to save time. An embedding maps a word to a vector, but the same word may have a different meaning depending on where it appears in a sentence, and this is where positional encoding comes into play. The paper uses sine and cosine functions of different frequencies to generate the positional encodings, which are added to the word embeddings. If we don’t use positional encoding, we are treating the input as a bag of words instead of a sequence of words. After computing the input embeddings and applying the positional encoding, we get word vectors that carry positional information, i.e. context.
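As a rough illustration, the sinusoidal scheme from the paper, $PE(pos, 2i) = \sin(pos / 10000^{2i/d_{model}})$ and $PE(pos, 2i+1) = \cos(pos / 10000^{2i/d_{model}})$, can be sketched in plain Python. The function names and the toy embedding below are made up for this example, and plain lists are used instead of a tensor library to keep the sketch self-contained.

```python
import math


def positional_encoding(seq_len, d_model):
    """Return a seq_len x d_model table of sinusoidal positional encodings."""
    table = []
    for pos in range(seq_len):
        row = []
        for i in range(d_model):
            # Dimensions 2k and 2k+1 share the frequency 1 / 10000^(2k / d_model).
            angle = pos / (10000 ** (2 * (i // 2) / d_model))
            # Even dimensions use sine, odd dimensions use cosine.
            row.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
        table.append(row)
    return table


def add_positions(embeddings):
    """Add the encoding for position p to the embedding at position p."""
    pe = positional_encoding(len(embeddings), len(embeddings[0]))
    return [[e + p for e, p in zip(emb, enc)] for emb, enc in zip(embeddings, pe)]


# The same (made-up) word embedding placed at two positions
# yields two different vectors once the encoding is added.
word = [0.1, 0.2, 0.3, 0.4]
encoded = add_positions([word, word])
```

Because the encoding depends only on the position and the embedding size, the table can be computed once and reused for every input sequence.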

Attention in Machine Learning

Before getting into the details of the encoder block, let’s first talk about the concept of attention. Attention is the ability to dynamically highlight and use the salient parts of the information at hand. It involves answering the question “which part of the input should I focus on?”, in a manner similar to how the human brain does. It was introduced by Bahdanau et al. (2014) to address the bottleneck that arises from the use of a fixed-length encoding vector, where the decoder has only limited access to the information provided by the input. The attention mechanism described in that paper for RNNs is divided into three steps:

- Alignment scores: The alignment model takes the encoded hidden states ${h_i}$ and the previous decoder output ${s_{t-1}}$ to compute a score ${e_{t,i}}$ that indicates how well the elements of the input sequence align with the current output at position $t$. The alignment model is represented by a function $a(\cdot)$, which can be implemented by a feedforward neural network: ${e_{t,i}} = a({s_{t-1}}, {h_i})$

- Weights: The weights ${\alpha_{t,i}}$ are computed by applying a softmax operation to the previously computed alignment scores: ${\alpha_{t,i}} = \mathrm{softmax}({e_{t,i}})$

- Context vector: A unique context vector ${c_t}$ is fed into the decoder at each time step. It is computed as a weighted sum of all $T$ encoder hidden states: ${c_t} = \sum_{i=1}^{T} \alpha_{t,i}h_{i}$
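The three steps above can be sketched for a single decoder time step as follows. This is a minimal sketch, not the authors' implementation: the alignment model is a toy one-hidden-layer network, and its weights `w_s`, `w_h`, and `v` are hypothetical values that training would normally learn.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def additive_alignment(s_prev, h_i, w_s, w_h, v):
    """Toy alignment model a(s_{t-1}, h_i) with one tanh hidden layer.

    w_s and w_h are weight matrices (lists of rows) and v is a weight vector.
    All three are hypothetical parameters chosen for illustration.
    """
    hidden = [
        math.tanh(sum(ws * s for ws, s in zip(row_s, s_prev))
                  + sum(wh * h for wh, h in zip(row_h, h_i)))
        for row_s, row_h in zip(w_s, w_h)
    ]
    return sum(vj * hj for vj, hj in zip(v, hidden))


def attend(s_prev, encoder_states, align):
    """Return (weights alpha_t, context vector c_t) for one decoder step."""
    scores = [align(s_prev, h_i) for h_i in encoder_states]   # e_{t,i}
    weights = softmax(scores)                                 # alpha_{t,i}
    dim = len(encoder_states[0])
    context = [sum(w * h[d] for w, h in zip(weights, encoder_states))
               for d in range(dim)]                           # c_t
    return weights, context


# Made-up encoder states h_1..h_3 and previous decoder state s_{t-1}.
h = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
s_prev = [0.5, -0.5]
identity = [[1.0, 0.0], [0.0, 1.0]]
weights, context = attend(
    s_prev, h,
    lambda s, h_i: additive_alignment(s, h_i, identity, identity, [1.0, 1.0]),
)
```

The weights always sum to one, so the context vector is a weighted average of the encoder hidden states.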

Generalized Attention

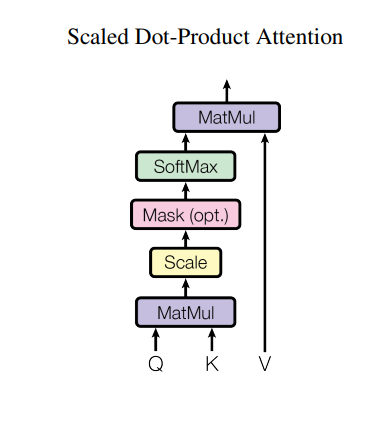

Self-attention involves evaluating attention with respect to oneself, i.e. how relevant the $i$-th word in a sentence is to the other words in the same sentence. The attention mechanism above can be generalized and viewed as the retrieval of a value $v_{k_{i}}$ for a query $q$ based on a key $k_{i}$ in a database. The general attention mechanism performs the following computations:

- Each query vector $q = s_{t-1}$ is matched against a database of keys to compute a score. The matching operation is the dot product of the query under consideration with each key vector $k_{i}$: $e_{q,k_{i}} = q \cdot k_{i}$

- The scores are passed through a softmax operation to generate the weights: $\alpha_{q,k_{i}} = \mathrm{softmax}(e_{q,k_{i}})$

- The generalized attention is then computed as a weighted sum of the value vectors $v_{k_{i}}$, where each value vector is paired with a corresponding key: $\mathrm{attention}(q, K, V) = \sum_{i} \alpha_{q,k_{i}} v_{k_{i}}$

Each word in an input sentence is attributed its own query, key, and value vectors. In translation, this essentially lets us compare the hidden vector for an output word to the hidden vector of each input word, and then combine the results into a context vector that reflects which words are of interest when translating the next word.
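The generalized mechanism can be sketched in a few lines of Python. This is only an illustration under simple assumptions: the vectors are made up, and for self-attention the queries, keys, and values are all taken to be the word vectors themselves, whereas a real model would first pass them through learned projections.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(u, v):
    """Dot product of two equally sized vectors."""
    return sum(a * b for a, b in zip(u, v))


def attention(queries, keys, values):
    """Dot-product attention: returns one output vector per query."""
    outputs = []
    for q in queries:
        scores = [dot(q, k) for k in keys]      # match the query against every key
        weights = softmax(scores)               # turn the scores into weights
        outputs.append([sum(w * v[d] for w, v in zip(weights, values))
                        for d in range(len(values[0]))])  # weighted sum of values
    return outputs


# Self-attention over three made-up word vectors:
# every word acts as a query, a key, and a value.
words = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
contextualized = attention(words, words, words)
```

The Transformer additionally divides each score by $\sqrt{d_{k}}$ before the softmax, which is omitted here to match the three steps as listed.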

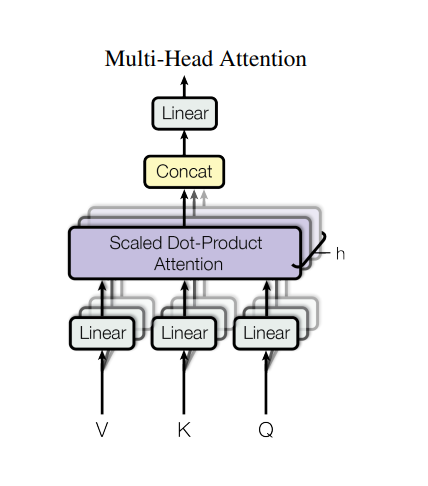

Multi head attention

Multi-head attention computes attention between every position and every other position, so we start from vectors that embed the word at each position. The attention computation treats each word as a query, finds the keys that correspond to the other words in the sentence, and takes a convex combination of the corresponding values. A single pass of this computation, which looks at all pairs of positions in one block, is called a head. The computation is repeated n times in parallel, giving n heads; each head uses its own learned projections of the queries, keys, and values, and the outputs of the heads are concatenated.
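A minimal sketch of this idea, assuming the layout from the original paper (each head projects the input to a smaller dimension, runs scaled dot-product attention, and the concatenated heads go through a final output projection). The projection matrices here are random placeholders standing in for learned weights, and all function names are invented for the example.

```python
import math
import random


def matmul(a, b):
    """Multiply an n x m matrix by an m x p matrix (lists of rows)."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(scores):
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(q, k, v):
    """One attention head: softmax(q k^T / sqrt(d_k)) v, row by row."""
    d_k = len(k[0])
    out = []
    for q_row in q:
        scores = [sum(a * b for a, b in zip(q_row, k_row)) / math.sqrt(d_k)
                  for k_row in k]
        weights = softmax(scores)
        out.append([sum(w * v_row[d] for w, v_row in zip(weights, v))
                    for d in range(len(v[0]))])
    return out


def random_matrix(rows, cols, rng):
    """Placeholder for a learned weight matrix."""
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


def multi_head_self_attention(x, num_heads, rng):
    """Run num_heads attention heads over x and merge their outputs."""
    d_model = len(x[0])
    if d_model % num_heads != 0:
        raise ValueError("d_model must be divisible by num_heads")
    d_head = d_model // num_heads
    heads = []
    for _ in range(num_heads):
        # Each head gets its own query, key, and value projections.
        w_q = random_matrix(d_model, d_head, rng)
        w_k = random_matrix(d_model, d_head, rng)
        w_v = random_matrix(d_model, d_head, rng)
        heads.append(scaled_dot_product_attention(
            matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)))
    # Concatenate the head outputs position by position, then project back.
    concat = [[val for head in heads for val in head[pos]] for pos in range(len(x))]
    w_o = random_matrix(num_heads * d_head, d_model, rng)
    return matmul(concat, w_o)


# Three made-up word vectors of size 4, attended to by 2 heads.
x = [[1.0, 0.0, 0.5, 0.2], [0.0, 1.0, 0.3, 0.7], [0.4, 0.4, 0.1, 0.9]]
output = multi_head_self_attention(x, num_heads=2, rng=random.Random(0))
```

Since the heads do not depend on one another, they can be evaluated in parallel; the loop here is sequential only for readability.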

The Encoder Block

The encoder consists of a stack of N = 6 identical layers, where each layer is composed of two sublayers:

- The first sublayer implements a multi-head self-attention mechanism. It is followed by an Add & Norm layer, which adds a residual connection from the input of the multi-head attention layer to its output and then normalizes the result (layer normalization).

- The second sublayer is a fully connected feed-forward network that is applied to every one of the attention vectors. In practice, these feed-forward networks transform the attention vectors into the form expected by the next encoder or decoder block. Because the attention heads are independent of each other, and the feed-forward network is applied to each position separately, we can leverage parallelization here: all the words in a sentence are sent in at once, and the output is a set of encoded vectors, one for every word.
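To make the wiring of the two sublayers concrete, here is a small sketch of one encoder layer. It assumes the feed-forward network has the linear, ReLU, linear form used in the original paper; the attention step is passed in as a function, and the identity stand-ins used in the example are placeholders, not real sublayers.

```python
import math


def layer_norm(vec, eps=1e-6):
    """Normalize one vector to zero mean and (roughly) unit variance."""
    mu = sum(vec) / len(vec)
    var = sum((x - mu) ** 2 for x in vec) / len(vec)
    return [(x - mu) / math.sqrt(var + eps) for x in vec]


def add_and_norm(sublayer_input, sublayer_output):
    """Residual connection followed by layer normalization, per position."""
    return [layer_norm([a + b for a, b in zip(x, y)])
            for x, y in zip(sublayer_input, sublayer_output)]


def relu_feed_forward(vec, w1, b1, w2, b2):
    """Position-wise network: linear layer, ReLU, linear layer.

    w1 has shape d_model x d_ff and w2 has shape d_ff x d_model (lists of rows).
    """
    hidden = [max(0.0, sum(x * w for x, w in zip(vec, col)) + b)
              for col, b in zip(zip(*w1), b1)]
    return [sum(h * w for h, w in zip(hidden, col)) + b
            for col, b in zip(zip(*w2), b2)]


def encoder_layer(x, self_attention, feed_forward):
    """Apply both sublayers, each wrapped in Add & Norm."""
    attended = add_and_norm(x, self_attention(x))
    # The same feed-forward network is applied to every position independently.
    transformed = [feed_forward(row) for row in attended]
    return add_and_norm(attended, transformed)


# Placeholder sublayers: identity attention and an identity-weight feed-forward net.
identity = [[1.0, 0.0], [0.0, 1.0]]
zeros = [0.0, 0.0]
encoded = encoder_layer(
    [[1.0, 2.0], [3.0, 5.0]],
    self_attention=lambda rows: rows,
    feed_forward=lambda row: relu_feed_forward(row, identity, zeros, identity, zeros),
)
```

In a full encoder, six such layers would be stacked, with the output of one layer serving as the input of the next.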

The Decoder Block

Before getting into the details of the decoder block, let’s touch upon the topic of masked multi-head attention.

Masked Multi-Head Attention

Masked multi-head attention is similar to multi-head attention, except that some values are masked (i.e. the probabilities of the masked values are nullified to prevent them from being selected) when decoding an output value. The output value should depend only on previous outputs, not future outputs, hence we mask the future outputs: $\mathrm{MaskedAttention}(Q, K, V) = \mathrm{softmax}\left(\frac{QK^{T}}{\sqrt{d_{k}}} + M\right)V$, where $M$ is the mask matrix, with entries of $0$ for positions that may be attended to and $-\infty$ for masked positions.
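A small sketch of a single masked head may help here. It assumes a causal mask whose entries are 0 where attention is allowed and negative infinity where it is not, so that the softmax assigns those positions a weight of exactly zero. The input vectors and function names are made up for the example.

```python
import math


def causal_mask(n):
    """M[i][j] is 0 when position j is not in the future of i, else -infinity."""
    neg_inf = float("-inf")
    return [[0.0 if j <= i else neg_inf for j in range(n)] for i in range(n)]


def masked_attention(q, k, v):
    """Scaled dot-product attention in which position i only sees positions <= i."""
    n, d_k = len(q), len(k[0])
    mask = causal_mask(n)
    outputs, all_weights = [], []
    for i in range(n):
        scores = [sum(a * b for a, b in zip(q[i], k[j])) / math.sqrt(d_k) + mask[i][j]
                  for j in range(n)]
        peak = max(scores)  # finite, because position i can always attend to itself
        exps = [math.exp(s - peak) for s in scores]  # exp(-inf) evaluates to 0.0
        total = sum(exps)
        weights = [e / total for e in exps]
        all_weights.append(weights)
        outputs.append([sum(w * row[d] for w, row in zip(weights, v))
                        for d in range(len(v[0]))])
    return outputs, all_weights


# Three made-up decoder vectors used as queries, keys, and values.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = masked_attention(x, x, x)
```

The first position can only attend to itself, so its output is simply its own value vector, while the last position sees the whole sequence.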

Coming back to the decoder block, the decoder also consists of a stack of N = 6 identical layers, each composed of three sublayers:

- The first sublayer receives the previous output of the decoder stack, augments it with positional information, and implements multi-head self-attention over it. While the encoder is designed to attend to all words in the input sequence regardless of their position, the decoder is modified to attend only to the preceding words. Hence, the prediction for a word at position $i$ can only depend on the known outputs for the words that come before it in the sequence. In the multi-head attention mechanism (which implements multiple single attention functions in parallel), this is achieved by introducing a mask over the values produced by the scaled multiplication of the matrices $Q$ and $K$.

- The second sublayer implements a multi-head attention mechanism similar to the one implemented in the first sublayer of the encoder, except that it is not pure self-attention: it receives the queries from the previous decoder sublayer and the keys and values from the output of the encoder. This allows the decoder to attend to all the words in the input sequence.

- The third sublayer implements a fully connected feed-forward network, similar to the one implemented in the second sublayer of the encoder.

Furthermore, the three sublayers on the decoder side also have residual connections around them and are succeeded by a normalization layer. Positional encodings are also added to the input embeddings of the decoder in the same manner as previously explained for the encoder.

Layer normalization normalizes the values in each layer to have zero mean and unit variance across the hidden units $h_{i}$. It is computed as $\hat{h}_{i} = \frac{g \times (h_{i} - \mu)}{\sigma}$, where $g$ is a learnable gain parameter, $\mu = \frac{1}{H} \sum_{i=1}^{H} h_{i}$, and $\sigma = \sqrt{\frac{1}{H} \sum_{i=1}^{H} (h_{i} - \mu)^{2}}$. This reduces covariate shift (gradient dependencies between each layer) and therefore requires fewer training iterations.
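The layer normalization formula can be checked on a small example. In this sketch the gain `g` is treated as a single scalar, and the small constant `eps` is an added assumption that guards against division by zero; it does not appear in the formula above.

```python
import math


def layer_norm(h, g=1.0, eps=1e-8):
    """Normalize the hidden units of one layer, then scale by the gain g."""
    size = len(h)
    mu = sum(h) / size                                       # mean over hidden units
    sigma = math.sqrt(sum((x - mu) ** 2 for x in h) / size)  # standard deviation
    return [g * (x - mu) / (sigma + eps) for x in h]


# Made-up activations of a layer with H = 3 hidden units.
normalized = layer_norm([1.0, 2.0, 3.0])
```

With the default gain of 1, the result has a mean of zero and a variance very close to one, regardless of the scale of the input activations.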

What are applications of Transformer models?

Transformer models have many applications in natural language processing (NLP) and beyond. One of the most popular Transformer-based models is called BERT, short for “Bidirectional Encoder Representations from Transformers.” It was introduced by researchers at Google and soon made its way into almost every NLP project, including Google Search. BERT refers not just to a model architecture but to a trained model itself, which can be downloaded. It was trained by Google researchers on a massive text corpus and has become something of a general-purpose pocket knife for NLP.

More recently, the model GPT-3, created by OpenAI, has been blowing people’s minds with its ability to generate realistic text. The GPT model architecture consists of a stack of transformer layers. Each transformer layer consists of a multi-head self-attention mechanism that allows the model to attend to different parts of the input sequence simultaneously, as well as a feed-forward neural network that processes the attended inputs. The model is trained using a variant of the language modeling objective, which involves predicting the next word in a sequence given the previous words. The latest version of the GPT model, GPT-3, is one of the most powerful language models to date, with 175 billion parameters. It has been shown to perform well on a variety of natural language processing tasks and can generate highly coherent and realistic text. GPT-3 has been used to generate articles, essays, chatbot responses, and even creative writing like poetry and short stories.

Some of the areas where transformer models are being used are:

- Language Translation: Transformer models have been used extensively in machine translation systems like Google Translate, Bing Translator, and DeepL.

- Language Modeling: Transformer models have been used to create language models that can predict the next word in a sequence, such as the popular GPT-3 model.

- Question Answering: Transformer models have been used to develop question answering systems like Google’s BERT and OpenAI’s GPT-3 that can answer questions based on large amounts of text.

- Text Summarization: Transformer models have been used to summarize long texts into shorter versions, such as the models used in Google News.

- Sentiment Analysis: Transformer models have been used to perform sentiment analysis, which is the process of determining whether a piece of text is positive, negative, or neutral.

- Chatbots: Transformer models have been used to create chatbots that can interact with users in natural language, such as chatbots based on OpenAI’s GPT-3.

- Speech Recognition: Transformer models have been used in automatic speech recognition (ASR) systems that can convert spoken language into text, such as the models used in Amazon Alexa and Google Assistant.

- Image Captioning: Transformer models have been used to generate captions for images, such as the models used in Microsoft’s CaptionBot.

- Music Generation: Transformer models have also been used in music generation systems, such as OpenAI’s Jukebox model which generates music based on a given prompt.

Overall, transformer models have revolutionized the field of NLP and have enabled the development of various advanced applications.